Nine years ago, I summed up 2010 as “the year that crowdsourced transcription finally caught on.” This seems like a good time to review the big developments in the field over the decade that 2010 launched.

What the field looked like in 2010

It’s interesting to look back at 2010 to see what’s happened to the projects I thought were major developments:

FamilySearch Indexing remains the largest crowdsourcing transcription project. In the 2010s, they upgraded their technology from a desktop-based indexing program to a web-based indexing tool. Overall numbers are hard to find, but by 2013, volunteers were indexing half a million names per day.

In 2010, the Zooniverse citizen science platform had just launched its first transcription project, Old Weather. This was a success in multiple ways:

- The Old Weather project completed its initial transcription goals, proving that a platform and volunteer base that started with image classification tasks could transition to text-heavy tasks. Old Weather is now in its third phase of data gathering.

- Zooniverse was able to use the lessons learned in this project to launch new transcription projects like Measuring the ANZACs, AnnoTate, and Shakespeare’s World. They were eventually able to include this type of crowdsourcing task in their Zooniverse Project Builder toolset.

- The Scribe software Zooniverse developed for Old Weather was released as open source and, after a collaboration with NYPL Labs, became the go-to tool for structured data transcription projects in the middle part of the decade, adopted by University of California-Davis, Yale Digital Humanities Lab, and others.

The North American Bird Phenology Program at the Patuxent Wildlife Research Center was apparently completed in 2016, transcribing nearly a million birdwatcher observation cards. Unfortunately, its digital presence seems to have been removed with the retirement of the project, so its fantastic archive of project newsletters is no longer available to serve as a model.

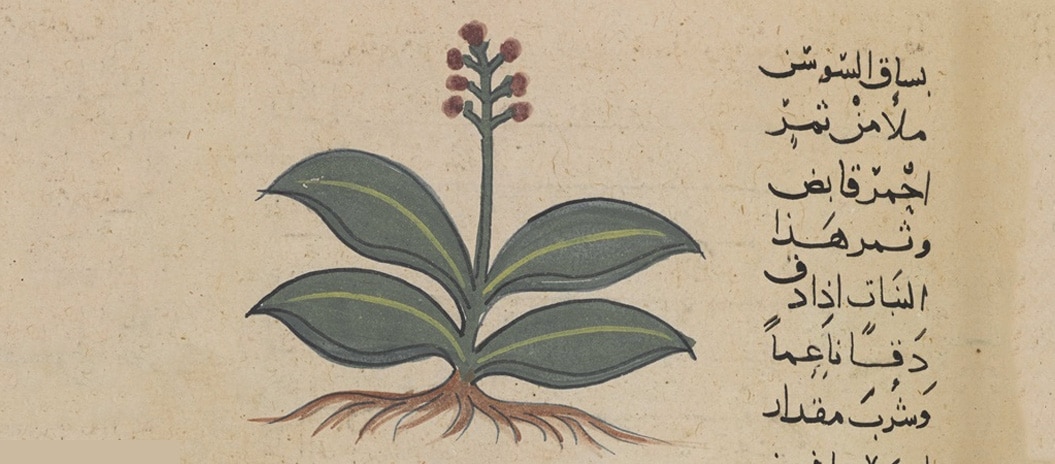

Our own open-source FromThePage software has been deployed at university libraries and botanical gardens around the world and is used on materials ranging from Arabic scientific manuscripts to Aztec codices. Statistics are hard to come by for a distributed project, but the FromThePage.com site currently shows 455,846 transcribed pages, which does not include the 100,000+ pages for projects removed after completion.

Transcribe Bentham has continued, reaching 22,740 pages transcribed in December 2019. The team continues to produce cutting-edge research about crowdsourcing and ways that crowdsourced transcription can provide ground-truth data for machine learning projects.

Developments in the 2010s

What were the major developments during the 2010s?

Maturity

In 2010, crowdsourcing was considered an experiment. Now, it’s a standard part of library infrastructure: seven state archives run transcription and indexing projects on FromThePage, and some (like the Library of Virginia) run additional projects on other platforms simultaneously. The British Library, Newberry, Smithsonian Institution, and Europeana all run crowdsourcing initiatives. Crowdsourcing platforms integrate with content management systems (like FromThePage with CONTENTdm or Madoc with Omeka S) and crowdsourcing capabilities are built directly into digital library systems like Goobi. Library schools are assigning projects on crowdsourcing to future archivists and librarians.

Perhaps the best evidence for the maturity of the methodology is this announcement by Kate Zwaard, Director of Digital Strategy at the Library of Congress, writing in the December 2019 LC Labs newsletter:

This time of transition of seasons also brings a new beginning for By the People. It is graduating from pilot to program and moving, along with program community managers, to a new home in the Digital Content Management Section of the Library. LC Labs incubated and nurtured By the People as an experiment in engaging users with our collections. Success, though, means it must move as it transitions to a permanent place in the Library.

The By the People crowdsourcing platform had started as an experiment within LC Labs, was built up under that umbrella by able veterans of previous projects, and is now simply Library of Congress infrastructure.

Cross-pollination

One of the ways the field matures is through personnel moving between organizations, bringing their expertise to new institutions with different source materials and teams. We've seen a number of early pioneers in crowdsourcing find positions in institutions that hired them for that experience. Those institutions' ability to commit resources to crowdsourcing projects, combined with what the crowdsourcing veterans discover as they work in different environments and with different materials, has pushed the methodology beyond the fundamentals.

This may seem a bit vague, since I don’t want to talk about particular colleagues’ careers by name, but it’s one of the things that suggests a vibrant future for crowdsourcing.

The rise of cloud platforms

In 2010, institutions that wanted to run crowdsourcing projects either had to develop their own software from scratch or install one of only a handful of tools on their own servers. At the end of the decade, cloud hosting exists for most types of tasks, making crowdsourcing infrastructure available to institutions of all sizes and even to individual editors:

- FromThePage.com supports free-text and structured text transcription with plans for individuals, small organizations, and large institutions.

- Zooniverse Project Builder supports image classification and structured transcription, and is free to use.

- SciFabric runs projects using the PyBossa framework, with small and large organization plans.

- Kindex supports free-text and structured transcription for individual genealogists.

- HJournals supports transcription and indexing linked to FamilySearch trees for genealogists.

Emerging consensus on “free labor” and ethics

I’m happy to see that the most exuberant aspiration (and fear) about crowdsourcing has largely disappeared from our conversations with institutions. I’m talking about the idea that scholarly editors or professional staff at libraries and archives can be replaced by a crowd of volunteers who will do the same work for free. Decision-makers seem to understand that crowdsourced tasks are different in nature from most professional work and that crowdsourcing projects cannot succeed without guidance, support, and intervention by staff.

Practitioners also continue to discuss ethics in our work. Current questions focus on balancing credit and privacy for volunteers, appropriate access to culturally sensitive material, and concern about immersing volunteers in archives of violence. One really encouraging development has been the use of crowdsourcing projects to advance communities’ understanding of their history, as with the Sewanee Project on Slavery, Race and Reconciliation or the Julian Bond Transcribathon.

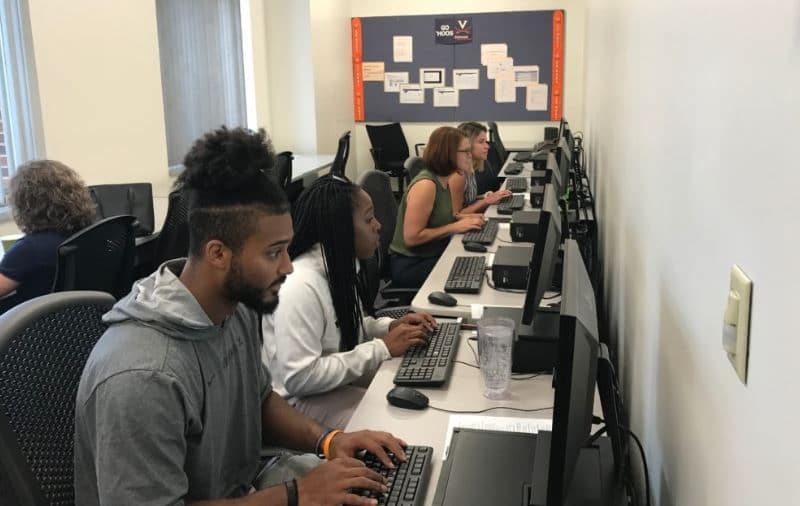

Transcribathons

The first transcribathon, and the first use of the word, was held by the Folger Shakespeare Library in 2014. Since then, transcribathons have become a popular way to kick off projects and get volunteers over the hurdle of their first transcriptions. They are also an opportunity for collaboration: the Frederick Douglass Day transcribathons have partnered with the Colored Conventions Project, University of Texas-Austin and the New Orleans Jazz Museum worked together on Spanish and French colonial documents, and Kansas State hosted a satellite transcribathon for the Library of Congress's suffragist letters. Some transcribathons last just an afternoon; others, like WeDigBio, span four days. The Julian Bond transcribathons have become annual events, while the Library of Virginia hosts a transcribathon every month for local volunteers.

Directions for the 2020s

What’s ahead in the next decade?

Quality control

Quality control methodologies remain a hot topic, as wiki-like platforms such as FromThePage experiment with assigned review, and double-keying platforms such as Zooniverse experiment with collaborative approaches. This remains a challenge not because volunteers don't produce good work, but because quality control methods require projects to carefully balance volunteer effort, staff labor, suitability to the material, and usefulness of results.

Unruly materials

I think we'll also see more progress on tools for source material that is currently poorly served. Free-text tools like FromThePage now support structured data (i.e., form-based) transcription, and structured transcription tools like Zooniverse can support free text, so there has been some progress on this front during the last few years. Despite that progress, tabular documents like ledgers or census records remain hard to transcribe in a scalable way. Audio transcription seems like an obvious next step for many platforms; the Smithsonian Transcription Center has already begun with TC Sound. Linking transcribed text to linked data resources will require new user interfaces and complex data flows. Finally, while OCR correction seems like it should be a solved problem (and is for Trove), it continues to present massive challenges in layout analysis for newspapers and in volunteer motivation for everything else.

Artificial intelligence

The Transcribe Bentham team has led the way on integrating crowdsourced transcription with handwritten text recognition as part of the READ project, and the Transkribus HTR platform has built a crowdsourcing component into its software. That's solid progress towards integrating AI techniques with crowdsourcing, but we can expect a lot more flux this decade as the boundaries shift between the kinds of tasks computers do well and those only humans can do. If experience with OCR is any indication, one of the biggest challenges will be finding ways to use machine learning to make humans more productive without replacing or demotivating them.
