AI (Artificial Intelligence) is at the heart of the most disruptive innovation since the Industrial Revolution – the current Knowledge Revolution. It can potentially increase the speed, efficiency, and effectiveness of public and private stakeholders’ actions in tackling the intricate global challenges encompassed by the UN’s Sustainable Development Goals (AI for Good). However, what can be done about low levels of trust in AI systems, which are primarily due to ethical reasons?

Trust is one of the most important keys to success in doing business and providing value to users. Yet AI remains the most heavily debated technology when it comes to ethical concerns and trust-related issues. If we want AI systems to be widely used, we have to build ethical and inclusive AI systems.

Why might AI systems not be ethical and inclusive?

The most widely used AI algorithms are essentially code that tries to identify patterns in large amounts of data. That code is written by engineers, and as a consequence AI systems are characterized by several inseparable factors. They:

- Reflect the manner in which the problem-statement is designed.

- Reflect the values and biases of their designers, as well as their knowledge. By the same token, they also reflect the designers' likely gaps in knowledge of human-centered design, international public policy, human rights, ethics, and related areas.

- Often exclude marginalized populations from data processing because of (a) lack of access, (b) lack of visibility, and/or (c) lack of data about those populations, hence ‘giving standing to some (often unspecified) portion of humanity’ but also implying a choice of ‘which antisocial people, if any, to exclude from the social choice process’ (Baum, 2017).

- Reproduce endemic sociological and historical bias, and other inequalities that exist in society. AI does not challenge the status quo unless the process is purposely designed to do so.
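The exclusion described above can sometimes be spotted before any model is trained, by comparing how groups are represented in a dataset with how they are represented in the population the system is meant to serve. The following is a minimal sketch of such a check, not a method taken from this article: the function name `representation_gaps`, the `region` field, the reference shares, and the 5-point tolerance are all hypothetical choices made for illustration.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, tolerance=0.05):
    """Flag groups whose share of `records` falls short of a reference share.

    `reference_shares` maps each group to the share it is assumed to have in
    the target population (an input the caller must supply and justify).
    A group is flagged when it is under-represented by more than `tolerance`.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        # With no records at all, every group has an observed share of zero.
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = {"expected": expected, "observed": observed}
    return gaps


# Hypothetical toy data: 9 urban records and 1 rural record.
records = [{"region": "urban"}] * 9 + [{"region": "rural"}]
# Hypothetical reference shares for the population being served.
reference = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(records, "region", reference)
for group, shares in gaps.items():
    print(f"{group}: expected {shares['expected']:.0%}, observed {shares['observed']:.0%}")
```

A check like this only surfaces gaps along the dimensions someone thought to measure, which is one reason the diversity of the team choosing those dimensions matters.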

These factors come with a set of commonly agreed ethical risks, such as:

- Misuse of technology to the detriment of society.

- Lack of transparency of the algorithms used (open data).

- Possibly negative social impact, on a global scale. One of the major socio-economic risks is that inequality becomes systemic.

- Lack of a regulatory and legal framework that supports innovation and R&D while setting practical ethical rules to be implemented within any innovation lab or entity developing AI-based projects.

- Lack of representation of all end-users, and

- Unintended consequences – the unforeseen and possibly damaging effects.

If we look closely at these two sets of factors, the lack of diversity stands out as a common denominator. It leads teams to reproduce similar thinking, to miss threats and opportunities that lie outside their comfort zone or usual way of thinking, and to take the easy way out towards a known solution.

How to build ethical and inclusive AI?

AI is cross-cutting by nature. It requires the involvement of designers, implementers (beneficiaries/end-users, grassroots organizations, national entities, international organizations…), funders and donors, and legal professionals.

From a talent management perspective, this inter-disciplinarity could be maximized by a diversity policy aimed at gathering a pool of diverse talents coming from different backgrounds, socio-economic groups, sectors, and regions, and therefore reflecting a variety of understanding and opinions.

From a process design perspective, AI for Good applications are also an opportunity to generate out-of-the-box ideas and solutions through a collaborative process.

Considering the tremendous impact that most of today’s AI solutions have on people and society, it would be detrimental to build solutions in isolation from the people and social circumstances that make them necessary in the first place.

Collaboration among varied talents enables us to bridge gaps in understanding between different mindsets and to unite people and values. It therefore helps to share knowledge and to seed core concepts and principles within other professions: for example, human rights and ethical concerns within an ecosystem of engineers, or data collection processes within a legal ecosystem.

Collaborative AI means harnessing crowd wisdom, diversity, and inclusion united in a single project community. Solutions that emerge from such collaborations share common values, beliefs, and often a bigger vision that serves the long-term interests of those communities.

It all sounds good in theory, but is it possible in practice?

Building an Ethical and Inclusive AI: an illustration of a bottom-up collaboration.

There are various forms of organizational structures (accelerators, advisors, labs) that have been chosen to promote crowd wisdom, diversity, and inclusion from the ideation to the scaling-up phases, combining both bottom-up and top-down approaches to promote talents.

The Omdena platform has chosen to create a bottom-up collaboration. To date, over 900 data scientists, data engineers, and domain experts from 79 countries have taken part in Omdena projects. Omdena uses the following three steps to execute projects.

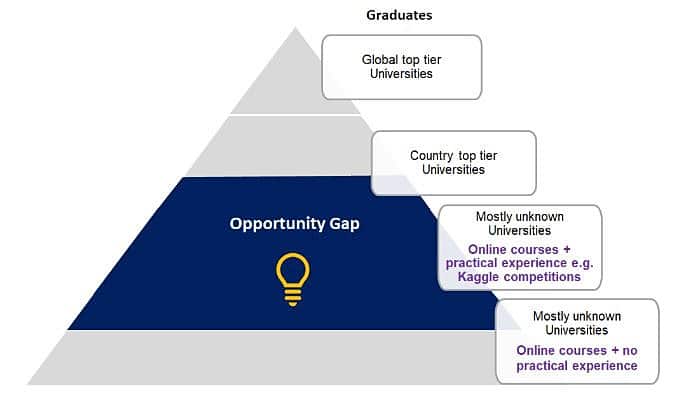

Step 1. Involve different groups from the middle of the talent pyramid with a focus on women.

Most technology companies focus on hiring people at the top of the talent pyramid, where there are fewer women. Many talented women are hidden in the middle of the pyramid, educating themselves through online courses but lacking opportunities and encouragement.

Talent Pyramid

Step 2. Build a communal and collaborative bottom-up team with different stakeholders

Next, form inclusive and gender-balanced project communities with different stakeholders that collaborate to build AI products based on common values, beliefs, and often a bigger vision.

Step 3. Create a self-organized structure for collaboration

The right organizational structures and practices empower all stakeholders by connecting intrinsic and extrinsic motivations (which are not related to money) and create an incentive structure in which leaders foster collaborative leadership. This organizational structure decreases the need to control people and provides opportunities to learn and grow together. Collaborators organize themselves and end up creating a self-organized learning environment (SOLE).

Have you experienced or witnessed any institutionalized bias in AI applications? Please share your insights with us.

This article was co-authored by Virginie Martins de Nobrega. Virginie founded CREATIVE RESOLUTION in 2016 to engage as an actor of change, while practising as a Registered Lawyer and a Mediator. In 2018, research was conducted on the risks and opportunities of sustainability-oriented innovation and AI applications to leverage the impact, speed, and efficiency of public policies and initiatives in relation to the SDGs, with a focus on the United Nations system (Innovation Lab).

CREATIVE RESOLUTION thus organically broadened its area of expertise to governance, applied ethics, and applied human rights in innovations and applications of Artificial Intelligence for the Sustainable Development Goals (SDGs), or "AI for Good".
