Data structures are essential for organizing and representing data in ways that allow efficient storage, retrieval, and processing. Data annotation supports many aspects of building and maintaining them, especially when large datasets require manual labeling or categorization. Crowdsourced data annotation outsources the task of data labeling and annotation to a large group of non-employee individuals, often through online platforms, so that they can collectively contribute their knowledge and expertise to complete the work.

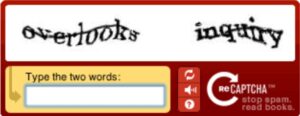

Dataset annotation can include tasks such as tagging images with relevant labels, transcribing audio recordings, or more complex tasks such as identifying specific objects or features within an image or video. Many of us have taken part in crowdsourced data annotation without realizing it, through the CAPTCHA “prove you are human” checks required to access online content. The reCAPTCHA version of this security tool doubled as a crowdsourcing tool: it used the transcriptions that users typed in to create accurate digital records of hard-to-read text in old books and magazines. The term CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) was coined in 2000 by a team including Luis von Ahn, an acknowledged crowdsourcing leader, who went on to co-found the online language learning platform Duolingo.

Benefits of crowdsourced data annotation

Using crowdsourced data annotation more widely can be beneficial for several reasons. It is particularly useful when datasets become too large or too time-consuming for internal teams to handle. In overview, crowdsourcing offers three key benefits:

- it allows for the rapid annotation of large datasets, which can be particularly useful for training machine learning models.

- it can be a cost-effective solution as it allows organizations to outsource the annotation process to a large number of people, rather than hiring a dedicated team of annotators.

- it can help to reduce individual annotator bias and increase the diversity of the labels, as it allows multiple perspectives to be represented in the annotation process. This can be particularly important for tasks such as image recognition, where a model's performance can be greatly affected by the diversity of its training data.

Here’s more detail on how crowdsourced data annotation can benefit data structures:

- Data Labeling and Categorization: Crowdsourcing can be used to assign labels or categories to unstructured data, making it easier to organize and analyze. For example, crowdsourcing can be utilized to label images, videos, or text documents for training machine learning models or building hierarchical taxonomies.

- Data Validation and Quality Control: Crowdsourcing can help validate the correctness of existing data structures. It allows multiple annotators to review and verify the data, reducing the chances of errors and inconsistencies. The collective intelligence of the crowd helps identify and correct mistakes in the dataset.

- Data Augmentation: In some cases, datasets need to be expanded to improve the robustness and generalization of machine learning models. Crowdsourcing can be employed to augment existing datasets by generating new examples or variations of the data.

- Ontology and Taxonomy Development: Building ontologies or taxonomies is crucial for organizing data into hierarchical structures with well-defined relationships. Crowdsourcing can be used to create and refine these structures by collectively defining concepts and their interconnections.

- Entity Recognition and Extraction: Data structures often involve identifying entities (e.g., names, locations, dates) and extracting relevant information. Crowdsourcing can help annotate and extract such entities from large text corpora or documents.

- Semantic Annotation: Crowdsourcing can aid in providing semantic meaning to the data elements, enabling better comprehension and analysis of the data. For example, annotators can label the sentiment of text or the emotions shown in images.

- Data Preprocessing: Before utilizing data for specific tasks, it often needs preprocessing and transformation into a standardized format. Crowdsourcing can assist in these data preparation tasks, making the data suitable for further analysis.

- Multi-Modal Data Structures: Some datasets contain multiple types of data, such as text, images, and audio. Crowdsourcing can help annotate and organize these diverse data types into a cohesive multi-modal data structure.
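The validation and quality-control idea in the list above usually relies on redundancy: several workers label the same item and their answers are combined into one consensus label. Below is a minimal Python sketch of one simple consensus rule, majority voting. The input format, the function name `majority_vote`, and the 0.6 agreement threshold are illustrative assumptions, not features of any particular platform.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical input format: item ID -> labels given by different crowd workers.
Annotations = Dict[str, List[str]]


def majority_vote(
    annotations: Annotations, min_agreement: float = 0.6
) -> Tuple[Dict[str, str], List[str]]:
    """Combine redundant crowd labels into one consensus label per item.

    An item is accepted when its most common label holds at least
    `min_agreement` of the votes; otherwise it is flagged for review.
    """
    consensus: Dict[str, str] = {}
    needs_review: List[str] = []
    for item_id, labels in annotations.items():
        if not labels:
            # No votes collected yet, so there is nothing to aggregate.
            needs_review.append(item_id)
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[item_id] = label
        else:
            needs_review.append(item_id)
    return consensus, needs_review


# Example: three workers labeled each image.
example = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "cat", "bird"],
    "img_003": ["bird", "bird", "bird"],
}
labels, review = majority_vote(example)
print(labels)  # {'img_001': 'cat', 'img_003': 'bird'}
print(review)  # ['img_002']
```

Keeping `min_agreement` above 0.5 means an accepted label always has a strict majority, so ties are never resolved arbitrarily. Flagged items can then be sent to additional workers or to an expert reviewer.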

Leading crowdsourcing data annotation platforms

Many leading providers of crowdsourcing data annotation operate on a global basis, though some geographic strengths remain due to their point of origin. Where they were founded may no longer be where they conduct most of their business.

- Alegion is headquartered in the US, and also operates in Europe. It provides a comprehensive data labeling and annotation platform that caters to machine learning and AI needs.

- Amazon Mechanical Turk (MTurk) is one of the oldest and most well-known crowdsourcing platforms. It allows businesses to post tasks, including data annotation, to a pool of distributed workers who complete these tasks for a fee.

- Labelbox was founded in early 2018 to empower organizations building the AI solutions that will drive the next generation of products and services. It provides a collaborative data annotation platform that enables teams to create, manage, and iterate on labeled data for machine learning projects. It also offers a user-friendly interface and supports various annotation types.

- Appen is a global leader in data annotation and crowd-based AI services. Its global headquarters is in Sydney, Australia, and its United States headquarters is in Kirkland, Washington, a suburb of Seattle, with further US offices in San Francisco, California and Detroit, Michigan. It offers data collection, transcription, and annotation services, catering to machine learning and artificial intelligence applications.

- LXT is an emerging leader in AI training data to power intelligent technology for global organizations. In partnership with its international network of crowdsourced contributors, LXT collects and annotates data across multiple modalities with the speed, scale and agility required by the enterprise. Its global expertise spans more than 145 countries and over 1,000 language locales. Founded in 2010, LXT is headquartered in Canada with a presence in the United States, UK, Egypt, India, Turkey and Australia. The company serves customers in North America, Europe, Asia Pacific and the Middle East.

- Toloka is a global platform founded in 2014, and based in Lucerne, Switzerland. It is owned by Yandex, which has its HQ in Moscow, Russia. Toloka is strong in Russia, and also has a substantial user base across Asia. It offers data annotation and labeling services for various tasks, including image and text classification.

To sum up, crowdsourcing data annotation brings together the collective effort of many individuals, which can lead to faster and more cost-effective data processing. However, it is essential to carefully manage the crowdsourcing process to ensure the quality and reliability of the annotated data. This may involve using redundancy, consensus mechanisms, and quality control measures to address issues like noise and bias in the annotations.
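As one example of a quality-control measure, teams often check how consistently annotators agree before trusting their labels. The Python sketch below computes Cohen's kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement between two annotators and p_e is the agreement expected by chance. Cohen's kappa is our illustrative choice of agreement statistic (the article does not prescribe one), and the function name `cohens_kappa` and the sample labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("expected two non-empty label sequences of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick the same label if each labels
    # independently at their own observed label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one and the same label for every item: kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical sentiment labels from two crowd workers on the same five texts.
worker_1 = ["pos", "pos", "neg", "neg", "pos"]
worker_2 = ["pos", "neg", "neg", "neg", "pos"]
print(round(cohens_kappa(worker_1, worker_2), 3))  # 0.615
```

A kappa near 1 indicates strong agreement beyond chance, while a value near 0 suggests the workers agree no more than random labeling would, which can point to unclear task instructions or noisy annotators.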

BOLD Awards 5th Edition

Crowdsourcing is one of 33 digital industry award categories in the 5th edition of BOLD Awards. Each entry can be submitted in up to three categories. All entries can be returned to and amended as often as required up to December 31st, 2023. However, the fee for processing applications will rise as we near the cut-off date, so we advise that you enter now.

There will be a round of public voting in January 2024 to create candidate shortlists for each category, which will then be assessed by an international panel of judges. Category winners will be announced at a gala dinner ceremony held at the H-FARM campus just outside Venice, Italy, in March 2024.
